Data science simplified, part 7

I also tried `lm(logdata$x ~ logdata$b3, data = logdata)`, but it did not work because it fits a linear model (a straight line in b3).

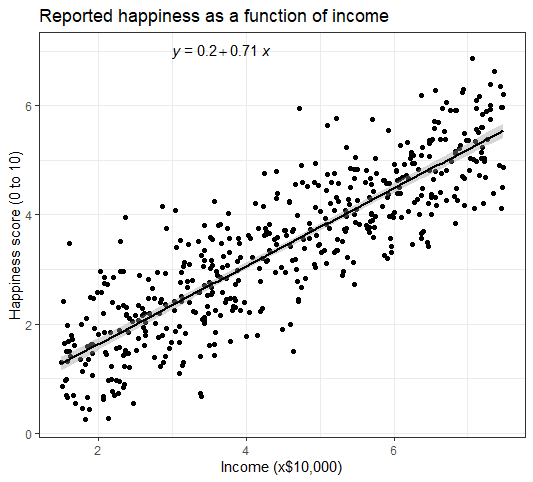

Log-linear models in R. In a log-log model (both y and x log-transformed), the coefficient is interpreted as the expected percentage change in y for a given percentage increase in x: roughly b percent per one percent increase in x. Introduction to linear models in R: in the last few posts of this series, we discussed the simple linear regression model.
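A minimal sketch of the log-log interpretation, using simulated data (an assumption, not the post's data) in which y grows as x^0.5, so a 1% increase in x should raise y by about 0.5%:

```r
# Hypothetical data: y proportional to x^0.5 with small multiplicative noise
set.seed(42)
x <- runif(200, min = 1, max = 100)
y <- 3 * x^0.5 * exp(rnorm(200, sd = 0.05))

# Log-log model: log(y) = a + b * log(x); b is the elasticity of y with respect to x
fit_loglog <- lm(log(y) ~ log(x))
b <- coef(fit_loglog)[["log(x)"]]

b                   # approximate % change in y for a 1% increase in x
(1.10^b - 1) * 100  # exact % change in y for a 10% increase in x
```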

Log-linear models describe the cell counts in contingency tables. A linear model, by contrast, is a statistical model that formulates the relationship between a dependent variable and one or more independent variables. You can also fit a model for the log of the mean with `glm(target ~ covariates, family = quasipoisson)`, which uses a log link by default.
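A short sketch of the quasipoisson route, assuming simulated count data (hypothetical `target` and `covariate`) whose mean grows exponentially in the covariate:

```r
# Hypothetical data: log(E[target]) = 0.5 + 0.4 * covariate
set.seed(1)
covariate <- seq(0, 5, length.out = 100)
target <- rpois(100, lambda = exp(0.5 + 0.4 * covariate))

# Log link by default; quasipoisson estimates a dispersion parameter instead of fixing it at 1
fit_qp <- glm(target ~ covariate, family = quasipoisson)

coef(fit_qp)                # estimates on the log scale
exp(coef(fit_qp))           # multiplicative effect on the mean per unit of covariate
summary(fit_qp)$dispersion  # a value near 1 means a plain Poisson would do
```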

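The log-linear model below, fitted to the UCBAdmissions table with `loglm()` from the MASS package, includes the Admit:Dept and Dept:Gender associations but no Admit:Gender term, so it treats admission and gender as conditionally independent given department:

```r
library(MASS)  # provides loglm()
loglm(~ Admit + Dept + Gender + Admit:Dept + Dept:Gender, data = UCBAdmissions)
# Call:
# loglm(formula = ~Admit + Dept + Gender + Admit:Dept + Dept:Gender, data = UCBAdmissions)
#
# Statistics:
#                       X^2 df P(> X^2)
# Likelihood Ratio 21.73551  6 ...
```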

$R^2 = \frac{\text{explained variation of the model}}{\text{total variation of the model}}$

Common choices of constraint in this setting are $\hat{\lambda}^X_1 = \hat{\lambda}^Y_1 = 0$ (the R default) or $\hat{\lambda}^X_r = \hat{\lambda}^Y_c = 0$.

```r
# Rows: prisoner's race; columns: victim's race
# help(loglin)
racetable1 <- rbind(c(151, 9),
                    c(63, 103))
test1 <- chisq.test(racetable1, correct = FALSE)  # FALSE, not f: R is case-sensitive
```

The `lm()` function takes two main arguments: a model formula and the data frame (`data`) that holds the variables. One very commonly used measure of how well a model fits is the coefficient of determination, R². The additive model would postulate that the arrival rates depend on the level
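A minimal sketch of computing R² by hand as explained variation over total variation, using the built-in `cars` data (chosen only as an example) and checking it against the value `summary()` reports:

```r
# Example data: stopping distance (dist) vs. speed in the built-in 'cars' data set
fit <- lm(dist ~ speed, data = cars)   # formula and data: the two main arguments

# R^2 = explained variation / total variation
total_ss     <- sum((cars$dist - mean(cars$dist))^2)
explained_ss <- sum((fitted(fit) - mean(cars$dist))^2)
r2_manual    <- explained_ss / total_ss

r2_manual
summary(fit)$r.squared   # should match the manual value for OLS with an intercept
```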

The criterion is that the variables involved in building the model must meet certain assumptions as prerequisites. To express a coefficient from a model with a log-transformed response as a percentage change, exponentiate the coefficient, subtract one from this number, and multiply by 100. How can you test whether your linear model fits well?
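The exponentiate, subtract one, multiply by 100 recipe can be sketched as follows, assuming hypothetical data in which y grows by about 8% per unit of x:

```r
# Hypothetical data: log(y) = 1 + log(1.08) * x + noise, i.e. about +8% per unit of x
set.seed(7)
x <- 1:50
y <- exp(1 + log(1.08) * x + rnorm(50, sd = 0.02))

fit_logy <- lm(log(y) ~ x)
b <- coef(fit_logy)[["x"]]

# Percentage change in y for a one-unit increase in x
pct_change <- (exp(b) - 1) * 100
pct_change
```

For small coefficients, `b * 100` alone is a close approximation; the exponentiated form stays accurate when `b` is larger.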

Fernando has now created a better model. We demonstrated this in the first homework, and it can easily be shown algebraically. Log-linear models are appropriate when there is no clear distinction between response and explanatory variables, or when there are more than two responses.

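We also discussed the multivariate regression model and methods for selecting the right model. I then tried `model <- nls(logdata$x ~ logdata$b3)`, but it gives me errors: `nls()` expects a formula that contains the parameters to be estimated, together with starting values for them. One consequence of this is that for the independence model, the number of parameters to be estimated is $r + c - 1$.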
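A sketch of a working `nls()` call for a logarithmic relationship. The data frame below is a hypothetical stand-in for `logdata` (response `x`, predictor `b3`), and the form `x = a + b * log(b3)` is an assumption about what "the relation is log" means:

```r
# Hypothetical stand-in for 'logdata': x depends logarithmically on b3
set.seed(3)
logdata <- data.frame(b3 = seq(1, 50, length.out = 80))
logdata$x <- 2 + 1.5 * log(logdata$b3) + rnorm(80, sd = 0.1)

# The parameters a and b appear in the formula, and start gives their initial values
model <- nls(x ~ a + b * log(b3), data = logdata, start = list(a = 1, b = 1))
coef(model)

# This curve is linear in a and b, so lm() on log(b3) gives the same estimates
fit_lm <- lm(x ~ log(b3), data = logdata)
coef(fit_lm)
```

Since `a + b * log(b3)` is linear in its parameters, `lm()` is the simpler choice here; `nls()` becomes necessary for forms such as `a * b3^b + c`.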

$\log(u) = \text{const} + b_1 r + b_2 c + b_3 rc$

There is one $\lambda$, there are $r - 1$ terms $\lambda^X_i$, and there are $c - 1$ terms $\lambda^Y_j$.
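A quick check of the $1 + (r - 1) + (c - 1) = r + c - 1$ count, fitting the independence model as a Poisson GLM. The Dept by Gender margin of the built-in UCBAdmissions table ($r = 6$, $c = 2$) is used only as an example:

```r
# Independence model: log(mu_ij) = lambda + lambda^X_i + lambda^Y_j
tab <- margin.table(UCBAdmissions, c(3, 2))   # Dept (6 levels) x Gender (2 levels)
counts <- as.data.frame(tab)                  # columns Dept, Gender, Freq

fit_indep <- glm(Freq ~ Dept + Gender, family = poisson, data = counts)

n_row <- nrow(tab)
n_col <- ncol(tab)
length(coef(fit_indep))   # estimated parameters
n_row + n_col - 1         # r + c - 1
```

R's default treatment contrasts drop the first level of each factor, which corresponds to the constraint $\hat{\lambda}^X_1 = \hat{\lambda}^Y_1 = 0$.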

All variables in a log-linear model are essentially "responses". The three candidate models are the null model, the additive model, and the saturated model. Here are the model and results:

Any valid constraint must satisfy $\hat{\lambda}^X_r = \log$ … Why are the interaction terms really log odds ratios? I have also claimed that the interaction coefficients in log-linear models correspond to log odds ratios. Note also that `log(sd(x))` is not equal to `sd(log(x))`.
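A tiny illustration that taking the log and taking the standard deviation do not commute, using hypothetical lognormal data:

```r
# Hypothetical positive data with sd 0.5 on the log scale
set.seed(11)
x <- rlnorm(1000, meanlog = 2, sdlog = 0.5)

log(sd(x))   # log of the spread measured on the original scale
sd(log(x))   # spread measured on the log scale, close to 0.5 here
```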

The null model would assume that all four kinds of patients arrive at the hospital or health center in equal numbers. Log-linear models describe the association and interaction patterns among categorical variables. Let's start with a saturated model for the 2x2 table:
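A sketch of the saturated model for a 2x2 table, fitted as a Poisson GLM on the `racetable1` counts from this post. Because the saturated model reproduces the observed counts exactly, its interaction coefficient equals the sample log odds ratio:

```r
# 2 x 2 table: rows = prisoner's race, columns = victim's race
racetable1 <- rbind(c(151, 9),
                    c(63, 103))
cells <- data.frame(count  = as.vector(racetable1),          # column-major order
                    row    = factor(rep(1:2, times = 2)),    # 1, 2, 1, 2
                    column = factor(rep(1:2, each = 2)))     # 1, 1, 2, 2

# Saturated model: log(mu_ij) = lambda + lambda^X_i + lambda^Y_j + lambda^XY_ij
fit_sat <- glm(count ~ row * column, family = poisson, data = cells)

coef(fit_sat)[["row2:column2"]]    # interaction coefficient
log((151 * 103) / (9 * 63))        # sample log odds ratio
```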

As in the ANOVA setting, there is no unique way of handling the constraints. The coefficient of determination is defined as the proportion of the total variability explained by the regression model. The R script is available on this blog's code page and can be opened with any text editor.

I am trying to fit a regression model because the plot suggests the relationship is logarithmic. The logarithm of ap is regressed against a quadratic time trend and a set of sine and cosine terms of the form $\sin(2\pi i t)$ and $\cos(2\pi i t)$. You can probably find a discussion of the issues and relative merits of these two approaches on crossvalidated.com.
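A sketch of the harmonic regression described above. It assumes `ap` is a monthly series and uses the built-in AirPassengers data as a stand-in; the number of harmonics (four) is an arbitrary illustrative choice:

```r
# Assumption: 'ap' is a monthly time series; AirPassengers is used as an example
ap <- AirPassengers
yr <- as.numeric(time(ap))   # time in years: 1949, 1949.083, ...
tc <- yr - mean(yr)          # centred time keeps the quadratic trend well conditioned

# Harmonic terms sin(2*pi*i*t) and cos(2*pi*i*t) for i = 1, ..., 4
sin_terms <- sapply(1:4, function(i) sin(2 * pi * i * yr))
cos_terms <- sapply(1:4, function(i) cos(2 * pi * i * yr))

# log(ap) on a quadratic trend plus the harmonic columns
fit_harm <- lm(log(ap) ~ tc + I(tc^2) + sin_terms + cos_terms)
summary(fit_harm)$r.squared
```

With monthly data, $i = 6$ would give $\sin(2\pi \cdot 6 t) = 0$ at every observation, so harmonics are usually kept at $i \le 5$ for the sine terms.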

Log-linear models are a little different from other modeling methods in that they don't distinguish between response and explanatory variables. One specific example is the model of conditional independence. The function used for building linear models is `lm()`.

Now that we have seen the linear relationship pictorially in the scatter plot and by computing the correlation, let's look at the syntax for building the linear model.
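The scatter plot, correlation, and `lm()` steps can be sketched with the built-in `mtcars` data (fuel economy `mpg` against weight `wt`), used here only as an example:

```r
# Scatter plot of the relationship
plot(mpg ~ wt, data = mtcars)

# Correlation: strength and sign of the linear association
r <- cor(mtcars$wt, mtcars$mpg)
r

# Syntax: lm(formula = response ~ predictor, data = data frame)
fit_mtcars <- lm(mpg ~ wt, data = mtcars)
summary(fit_mtcars)
```

With a single predictor, the model's R² equals the squared correlation `r^2`.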
